TL;DR

Today I learned that bilinear resampling beats bicubic sampling when reducing image resolution. Try it now. Even better, see if it lets you get the detail into a 512x512 that you previously clung on to a 1024x1024 for.

In the beginning...

It started with a dismissive giggle. A blog post from Wagner James Au over at NWN made the somewhat surprising claim that a debug setting in Firestorm gave much higher resolution textures: How To Display Extremely High-Res Textures In SL's Firestorm Viewer

"What a lot of nonsense" was my first reaction. After all, everyone knows that images are clamped at 1024 by the server. Viewers can't bypass that because, believe me, if they could, someone would have done so at some point. But I do try to keep an open mind on things, and so, along with Whirly Fizzle, the ever curious Firestorm QA goddess, I tried to work out what was going on.

The claim

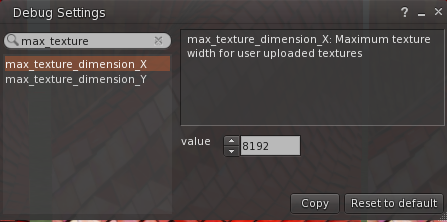

The claim (asserted by SL resident "Frenchbloke Vanmoer" and reported by Wagner) is that by playing with a debug setting in the viewer you can get much better upload results. We automatically get jittery when such claims are made; they are typically spurious and have bad side effects (I refer you to the horrible history of RenderVolumeLODFactor). The post, however, shows evidence from the story's originator. Something was clearly happening, and this warranted a far closer look.

The setting in question is max_texture_dimension_X and Y.

I show it here as 8192, as the blog suggests. But the clue to why this is not the true source of the improvement is that the default is 2048, not 1024 as you might expect.

Examining the evidence

It is still February, so too early for an April Fool, but was the user deluding himself with local texture mode, seeing a high resolution that nobody else could see? Whirly did some experimental uploads and confirmed that in her view the user (Frenchbloke) was correct: there was a distinct improvement in the viewer texture if it was uploaded from the 8192x8192 source. How odd.

But again you have to be very careful with the science here. When you resize in Photoshop, you then have to save the image. If you save as JPG then you leave your compressed image at the mercy of the JPEG compression and the quality settings used by Photoshop. In effect, you lovingly craft a texture, then crush the life out of it in JPEG compression. The uploader then decompresses it locally, before recompressing into the JPEG 2000 format required by Second Life (losing more info in the process). The end result is worse than starting with a large source image and doing the compression one time in the uploader. Problem solved, that must be it, right?

Wrong. Testing with PNG, a lossless format, showed similar results. Resizing in Photoshop and saving lossless, then importing, was worse than letting the viewer do the resizing. No matter how devoted we might be to our Second Life, we are not typically inclined to think that the viewer can be doing a better job than a more specialised tool, especially not when we are talking about the industry powerhouse Photoshop, but the evidence was suggesting this.

So what does that setting do?

At best nothing at all, at worst very little. It has no explicit involvement in the quality of a texture.

The max_dimension parameter is used to test up front the size of an uploaded image and block anything "too large", but that is all. In fact, it is enforced in the interactive upload dialogue but it is not applied in the "batch upload", so with or without the debug setting it has always been possible to pass oversize images into the viewer and let the viewer "deal with it". Conventional wisdom, however, tells us that that cannot be the right thing: you are giving over control to the viewer.

The max_dimension setting lets you pick a larger file from disk, but the viewer still uploads at 1024 max.

OK, so the setting does more or less nothing. What on earth is going on?

Having ruled out the "double jeopardy" scenario by using a lossless input format, I walked through the upload process in a debugger, testing the theory that perhaps giving a large input resulted in the Kakadu image library that Firestorm and LL both use for handling images doing a better job at compressing than it might when given an already resized image. The theory is sound, more data on input must be a good thing, right?

Wrong. Well, wrong insofar as there is no extra data at that point. This is how the viewer image upload works...

1. The user picks a file.

2. The file is loaded into memory.

3. It is forced to dimensions that are both a power of 2 and no greater than 1024.

4. The preview is shown to the user.

5. The user clicks upload.

6. The (already resized) image is compressed into JPEG 2000.

7. The result is uploaded.
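The forced resize in step 3 can be sketched roughly as follows. This is a minimal illustration and not the viewer's actual code: the names `clamp_dimension` and `upload_size` are made up for this example, and rounding down to the nearest power of 2 is an assumption (the post only says the result is a power of 2 and no greater than 1024).

```python
MAX_UPLOAD_DIMENSION = 1024  # the 1024 limit described in the post


def clamp_dimension(pixels, max_dim=MAX_UPLOAD_DIMENSION):
    """Return the largest power of 2 that is <= pixels and <= max_dim.

    Rounding *down* is an assumption made for this sketch.
    """
    if pixels < 1:
        raise ValueError("dimension must be at least 1 pixel")
    power = 1
    while power * 2 <= pixels and power * 2 <= max_dim:
        power *= 2
    return power


def upload_size(width, height):
    """Target size for an image, each axis clamped independently."""
    return clamp_dimension(width), clamp_dimension(height)


# A 3900x2800 photo is forced down to 1024x1024 ...
print(upload_size(3900, 2800))  # (1024, 1024)
# ... while an image that is already 1024x1024 needs no resize at all.
print(upload_size(1024, 1024))  # (1024, 1024)
```

The second case is the interesting one: an image that already satisfies both constraints passes through unchanged, so whatever resampling was done beforehand in an external tool is what gets compressed and uploaded.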

So... another theory dies, because by the end of step 3 we have already lost the additional data: we've forcibly resized the image.

Perhaps we have a bug then? Do we think we resize but mess it up?

I examined the early stages of the above sequence, and sure enough, we get to step 3 and it takes your input image, in my case a photo that was about 3900x2800, and forcibly scales it down to 1024x1024. What was even more bizarre was that if the image was already 1024x1024 the code did not touch it (a good thing), which meant that the Photoshop-resized image was passing directly through to the upload stage in step 6 untainted.

OK... so what is this scaling we are doing?

Perhaps the scaling that we do in the viewer is some advanced magic that gives better results for Second Life type use cases and is part of some cunning image processing by Kakadu...

Wrong. Very wrong. Firstly, this is not a third party scaling algorithm; the code is in the viewer. And what is more, it is using straightforward bilinear sampling.
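For anyone who has not met it before, bilinear sampling just blends the four source pixels nearest to each output position, weighted by distance. Below is a textbook sketch in plain Python for a single-channel image stored as a list of rows. It is not the viewer's implementation; the function name `bilinear_resize` and the pixel-centre mapping are choices made for this illustration.

```python
def bilinear_resize(src, out_w, out_h):
    """Resize a single-channel image (list of rows) with bilinear sampling."""
    src_h, src_w = len(src), len(src[0])
    out = []
    for y in range(out_h):
        # Map the centre of the output pixel back into source coordinates,
        # then clamp so we never read outside the image.
        sy = (y + 0.5) * src_h / out_h - 0.5
        sy = min(max(sy, 0.0), src_h - 1.0)
        y0 = int(sy)
        y1 = min(y0 + 1, src_h - 1)
        fy = sy - y0  # vertical blend weight
        row = []
        for x in range(out_w):
            sx = (x + 0.5) * src_w / out_w - 0.5
            sx = min(max(sx, 0.0), src_w - 1.0)
            x0 = int(sx)
            x1 = min(x0 + 1, src_w - 1)
            fx = sx - x0  # horizontal blend weight
            # Blend along x on the two rows, then blend those results along y.
            top = src[y0][x0] * (1 - fx) + src[y0][x1] * fx
            bottom = src[y1][x0] * (1 - fx) + src[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
print(bilinear_resize(image, 2, 2))  # [[2.5, 4.5], [10.5, 12.5]]
```

When halving an image, each output pixel lands exactly between four source pixels, so the result is simply their average. There is no sharpening step and no overshoot, which is one plausible reason it behaves so politely on reduction.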

Bi-linear? But that's crap, everyone knows that...Right?

Wrong. It turns out that whilst almost everyone has accepted the wisdom conveyed by Adobe (whose software states "Bicubic Sharper - best for reduction"), very few people have bothered to actually test this. A quick Google search shows the conventional thought is deeply ingrained, but halfway down the results is a lone voice of opposition.

http://nickyguides.digital-digest.com/bilinear-vs-bicubic.htm

Nicky Page's post demonstrates quite clearly that bilinear sampling is a more effective reduction technique.

So prove it then.

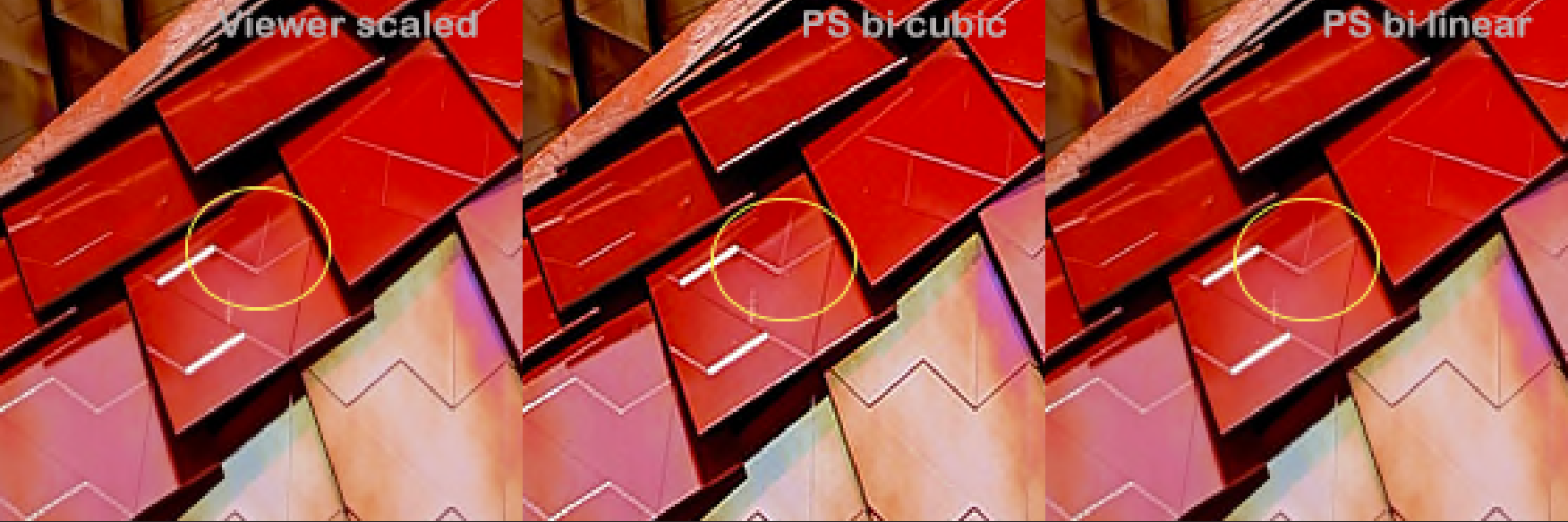

Not one to take anyone's word at this stage, I went back to the image that Whirly used, a red tiled roof. I started with the same high-resolution image. I loaded it into Photoshop (in this case I used the latest Photoshop CC 2019) and resized it first using the Adobe recommended Bicubic Sharper. Then, reverting back to the original, I resized it using Photoshop's own bilinear algorithm. Both were saved out as Targa (TGA).

I then switched to Firestorm (using the latest build again), set the debug setting as per the original instruction, and uploaded 3 textures:

1. The original texture, allowing the viewer to rescale it.

2. The manually rescaled bicubic image.

3. The manually rescaled bilinear image.

I then compared them inworld and, sure enough, the quality of both 1 and 3 was near identical. The quality of 2, the bicubic, was noticeably lower. Click the image below to see the magnified comparison (also available at https://gyazo.com/14bb5cc13eb45d7124bd0dc1a7caacc0).

Is there a less subjective way to illustrate this?

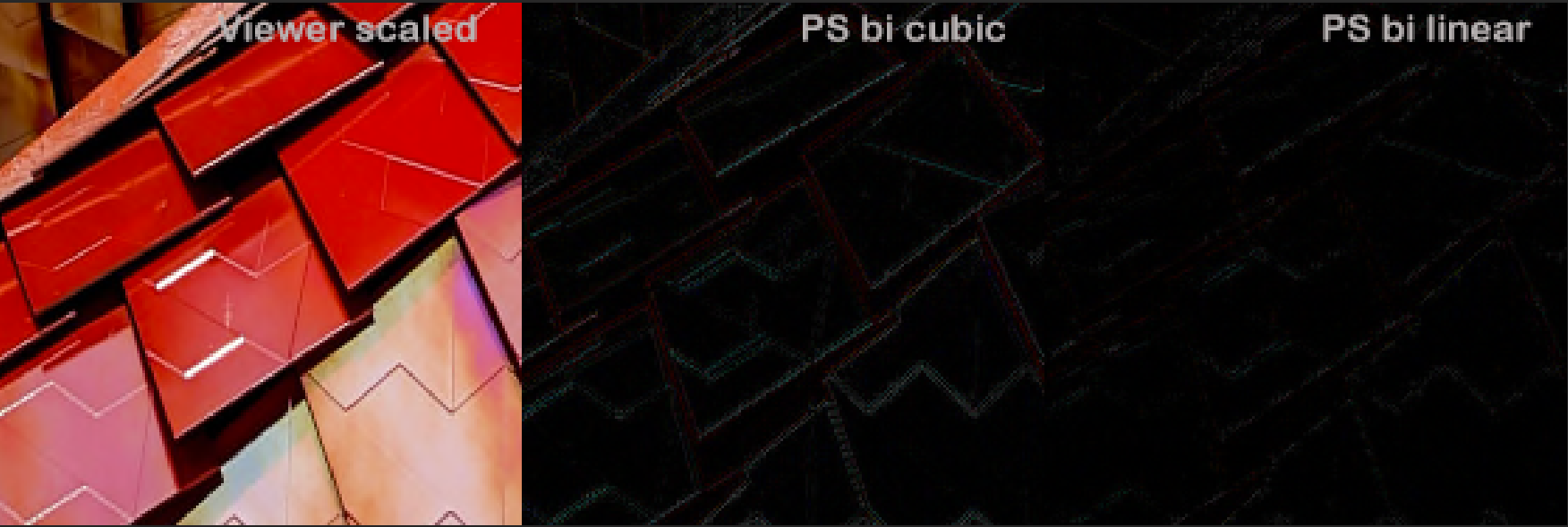

Looking at complex images for areas of blur is not a very scientific approach. I downloaded each of the textures, saving them as lossless Targa (TGA) images.

Loading all three back into photoshop I combined them as layers. Setting the relationship between the layers to subtract allowed me to show where the images differed.

As can be seen, the bicubic has a lot more artefacts than the two bilinears. Bilinear resampling is the way to go, it seems.
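The same comparison can be done numerically instead of by eye. The sketch below is an illustration only: the helper names are invented, the images are tiny single-channel grids rather than real textures, and it uses an absolute difference rather than Photoshop's Subtract blend (which, as far as I know, clips negative results to black and so only shows differences in one direction).

```python
def difference_map(image_a, image_b):
    """Per-pixel absolute difference between two same-sized images."""
    if len(image_a) != len(image_b) or len(image_a[0]) != len(image_b[0]):
        raise ValueError("images must have the same dimensions")
    return [
        [abs(a - b) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(image_a, image_b)
    ]


def mean_abs_difference(image_a, image_b):
    """Average of the difference map: 0 means identical, higher means further apart."""
    diff = difference_map(image_a, image_b)
    total = sum(sum(row) for row in diff)
    return total / (len(diff) * len(diff[0]))


# Made-up 2x2 pixel values purely to show the mechanics.
reference = [[100, 120], [140, 160]]
candidate = [[101, 118], [140, 164]]
print(difference_map(reference, candidate))       # [[1, 2], [0, 4]]
print(mean_abs_difference(reference, candidate))  # 1.75
```

A single number like this hides where the errors are, so it complements the layered difference image rather than replacing it.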

The observant among you will notice that the two bilinears still have differences, so which is best?

They have both undergone the round trip through JPEG 2000 compression, and when I applied the same test to the Photoshop bilinear resampled image that had not been uploaded, they both showed very similar amounts of artefacts but in slightly different places. Which leaves the ultimate decision with the uploader, I think.

So what have we learned?

Sometimes we learn things from the most unexpected sources. I owe a thank you to "Frenchbloke Vanmoer" for setting us on this path, and to Wagner James Au, for the post that brought this to my attention.

The key lesson here is you don't need debug settings to get better image quality. You need the right algorithm and from there it seems clear that bilinear resampling is the best way to go. A good reason not to use the debug setting is that by doing so you are limiting your improved textures to those that are 1024x1024. The same improvement can be gained for 512x512 and 256x256 if you do so in your photo editing tool of choice.

I hope this was a useful insight into not just how but why this effect is seen and a lesson to all that you should not always take advice at face value, but neither should you dismiss out of hand what seems cranky and illogical at first glance.

Take care

Beq

x


It's worth mentioning that using a Bicubic reduction on a normal map texture contributes to the distortion of the data that it represents, which is particularly easy to notice at UV seams where baked normal map data from where (for the sake of argument) the left and right side have to "meet" to form a seamless transition. This seamlessness is never fully accomplished, but using a Bicubic conversion algorithm blends the pixels on either side of the seam differently, making it so that the pixels on the "left" and "right" of the seam no longer align, since those two regions of the normal map aren't analyzed by the algorithm as a unit. This effect is particularly prominent on a normal map where the seam line doesn't follow 90 degree angles. Proper padding can help mitigate this, but it's been a source of near-constant frustration on my part.
