Decompression sickness

It was the compressed byte stream that was throwing out our numbers and, as a result, we will need to reproduce the data and compress it to find the byte streaming cost. This means that we need to look at how the polygons are stored in Blender.
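A toy example shows why raw byte counts mislead. This minimal sketch assumes, for illustration only, that the data is packed as little-endian 16-bit unsigned integers and compressed with Python's `zlib`. `byte_costs` is a hypothetical helper, not part of any SL or Blender API. The regular stream and the noisy stream have the same raw size, but their compressed sizes are very different.

```python
import random
import struct
import zlib


def byte_costs(values):
    """Return (raw_size, compressed_size) for a list of uint16 values.

    Assumption: little-endian uint16 packing plus zlib, as a stand-in
    for whatever the real encoder does.
    """
    raw = struct.pack(f"<{len(values)}H", *values)
    return len(raw), len(zlib.compress(raw))


# Same raw size, very different compressed size.
regular = [i % 16 for i in range(3000)]  # repetitive, like a regular grid
rng = random.Random(1)
noisy = [rng.randrange(65536) for _ in range(3000)]  # little redundancy

raw_regular, packed_regular = byte_costs(regular)
raw_noisy, packed_noisy = byte_costs(noisy)
print(raw_regular, packed_regular, raw_noisy, packed_noisy)
```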

We did a little of this when we were counting the triangles, but we barely scratched the surface.

All the data we need is in the bpy.data structure for a mesh object.

The bpy data structure is not especially well documented, but there is plenty of example code around. The excellent Blender Python console also lets you try things out interactively, and it has autocomplete.

Given an arbitrary mesh object (obj), we can access the mesh data itself through obj.data:

import bpy

obj = bpy.context.scene.objects.active # active object

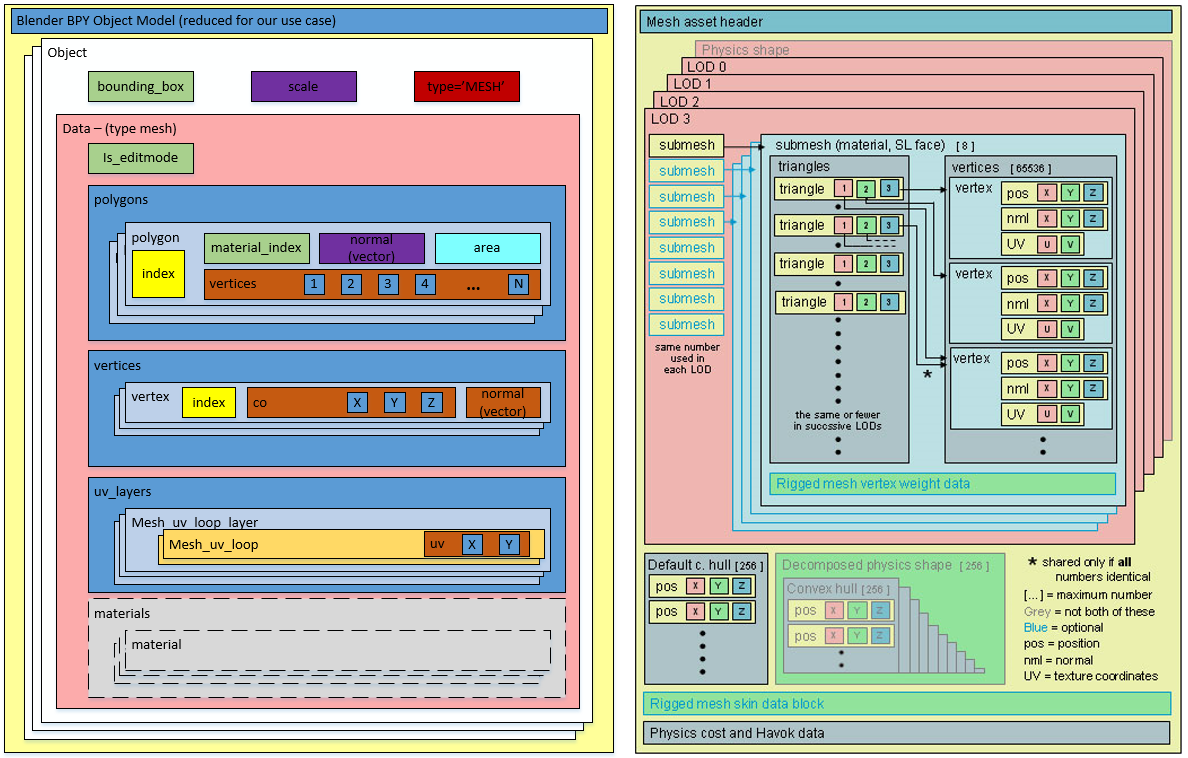

mesh = obj.dataFollowing in the footsteps of the wonderful visualisation of the SL Mesh Asset Format by Drongle McMahon that we discussed in a previous blog I have had a stab at a comparable illustration that outlines the parts of the blender bpy data structure that we will need access for our purposes

|

| On the left, we have my "good parts version" of the BPT datastructure, while on the right we have the SL Mesh Asset visualisation from Drongle McMahon's work. |
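To make the "good parts" concrete outside Blender, here is a minimal pure-Python stand-in. The dataclasses are hypothetical and only mirror real bpy attribute names (`MeshVertex.co`, `MeshPolygon.material_index`, `.vertices`, `.loop_indices`). The flat `uvs` list is also an assumption: in bpy the per-corner UVs live in `mesh.uv_layers.active.data[loop_index].uv`. The sketch shows how a polygon's vertex indices and loop indices pair up corner by corner.

```python
from dataclasses import dataclass


# Hypothetical stand-ins mirroring the bpy attribute names we need,
# so the traversal can be tried without Blender.
@dataclass
class MeshVertex:
    co: tuple  # (x, y, z) position, as in bpy's MeshVertex.co


@dataclass
class MeshPolygon:
    material_index: int  # which material slot the face uses
    vertices: list       # indices into Mesh.vertices, one per corner
    loop_indices: list   # indices into per-corner (loop) data such as UVs


@dataclass
class Mesh:
    vertices: list
    polygons: list
    uvs: list  # hypothetical flat list; in bpy: mesh.uv_layers.active.data[i].uv


def polygon_corners(mesh, poly):
    """Return (position, uv) for each corner, pairing vertex and loop indices."""
    return [(mesh.vertices[v].co, mesh.uvs[loop])
            for v, loop in zip(poly.vertices, poly.loop_indices)]


# One quad using material slot 0.
quad_mesh = Mesh(
    vertices=[MeshVertex((0, 0, 0)), MeshVertex((1, 0, 0)),
              MeshVertex((1, 1, 0)), MeshVertex((0, 1, 0))],
    polygons=[MeshPolygon(0, [0, 1, 2, 3], [0, 1, 2, 3])],
    uvs=[(0, 0), (1, 0), (1, 1), (0, 1)],
)
for poly in quad_mesh.polygons:
    print(poly.material_index, polygon_corners(quad_mesh, poly))
```

Inside Blender, the same loop would read `mesh.uv_layers.active.data[loop].uv` in place of `mesh.uvs[loop]`.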

- An SL mesh holds all the LODs in one "object". In Blender we have multiple objects, one per LOD.

- A Blender mesh has a single list of polygons that index into a shared list of vertices. SL has multiple submeshes, one per material face.

- SL only accepts triangles. Blender has quads and n-gons as well.

- Each SL submesh is self-contained, listing its own triangles, UVs, normals and vertices. Vertices are therefore duplicated where they are shared by multiple materials.

- SL data is compressed.
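The consequences of these differences are easy to underestimate. This minimal sketch counts what the SL side would need per material. It uses plain Python, and the input format is hypothetical: polygons are given as `(material_index, vertex_indices)` pairs. It assumes fan triangulation, so an n-gon becomes n - 2 triangles. The vertex count is a lower bound, because vertices would also have to be split wherever UVs or normals differ.

```python
from collections import defaultdict


def sl_counts(polygons):
    """Estimate SL-style submesh sizes from Blender-style polygons.

    polygons: list of (material_index, [vertex indices]) pairs (hypothetical format).
    Returns {material: (unique_vertex_count, triangle_count)}.
    """
    verts = defaultdict(set)
    tris = defaultdict(int)
    for material, poly in polygons:
        verts[material].update(poly)      # each submesh lists its own vertices
        tris[material] += len(poly) - 2   # fan triangulation of an n-gon
    return {m: (len(verts[m]), tris[m]) for m in verts}


# Two quads sharing the edge (1, 4), each with its own material.
# Blender stores 6 vertices; the SL submeshes need 4 + 4 = 8.
quads = [(0, [0, 1, 4, 3]), (1, [1, 2, 5, 4])]
print(sl_counts(quads))  # {0: (4, 2), 1: (4, 2)}
```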

So let's sketch out the minimum code we are going to need here.

For each model in a LOD model set:

- Iterate through the polygons, separating them by material.
- For each resulting material mesh:
  - For each poly in the material mesh:
    - add any new verts to the vert array for that material mesh
    - split the poly into triangles where necessary
    - add the resulting tris to the material's tri array
    - write the normal vector for the triangles
    - write the corresponding UV data
- Compress the block.
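Putting those steps together, here is a minimal pure-Python sketch for one LOD. It is not the eventual add-on code. It makes several assumptions of its own:

- The input is plain lists rather than bpy objects.
- Normals are flat, one per face.
- Polygons are fan-triangulated, which is only safe for convex polygons.
- Positions are quantised to uint16 over the bounding box, normals over [-1, 1], and UVs clamped to [0, 1].
- Each LOD block is a single zlib stream.

`build_lod_block`, `quantize` and `face_normal` are hypothetical names. The material split happens in the same pass as triangulation rather than as a separate first step.

```python
import struct
import zlib


def quantize(value, lo, hi):
    """Clamp value to [lo, hi] and map it onto the 0..65535 uint16 range."""
    if hi == lo:
        return 0
    value = min(max(value, lo), hi)
    return round((value - lo) / (hi - lo) * 65535)


def face_normal(a, b, c):
    """Unit normal of triangle (a, b, c) via the cross product."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = sum(x * x for x in n) ** 0.5 or 1.0
    return tuple(x / length for x in n)


def build_lod_block(positions, polygons):
    """Split one LOD's polygons into per-material submeshes and compress them.

    positions: list of (x, y, z) tuples (the shared Blender vertex list).
    polygons:  list of (material_index, vertex_indices, corner_uvs).
    Returns (submeshes, raw_size, compressed_size).
    """
    lo = [min(p[i] for p in positions) for i in range(3)]
    hi = [max(p[i] for p in positions) for i in range(3)]
    submeshes = {}
    for material, vids, uvs in polygons:
        sub = submeshes.setdefault(material, {"remap": {}, "verts": [], "tris": []})
        normal = face_normal(*(positions[v] for v in vids[:3]))  # flat shading
        corners = []
        for v, uv in zip(vids, uvs):
            key = (v, uv, normal)  # a new SL vertex whenever UV or normal differs
            if key not in sub["remap"]:
                sub["remap"][key] = len(sub["verts"])
                sub["verts"].append((positions[v], normal, uv))
            corners.append(sub["remap"][key])
        # Fan triangulation: correct for convex polygons only.
        for i in range(1, len(corners) - 1):
            sub["tris"].append((corners[0], corners[i], corners[i + 1]))

    raw = bytearray()
    for material in sorted(submeshes):
        sub = submeshes[material]
        for pos, nrm, uv in sub["verts"]:
            raw += struct.pack("<3H", *(quantize(pos[i], lo[i], hi[i]) for i in range(3)))
            raw += struct.pack("<3H", *(quantize(n, -1.0, 1.0) for n in nrm))
            raw += struct.pack("<2H", *(quantize(t, 0.0, 1.0) for t in uv))
        for tri in sub["tris"]:
            raw += struct.pack("<3H", *tri)
    return submeshes, len(raw), len(zlib.compress(bytes(raw)))


# Two quads sharing an edge, each with its own material.
positions = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (0, 1, 0), (1, 1, 0), (2, 1, 0)]
corner_uvs = [(0, 0), (1, 0), (1, 1), (0, 1)]
polys = [(0, [0, 1, 4, 3], corner_uvs), (1, [1, 2, 5, 4], corner_uvs)]
subs, raw_size, packed_size = build_lod_block(positions, polys)
print(raw_size, packed_size)
```

Each submesh re-lists its own vertices, so the corners duplicated along the material boundary show up directly in `raw_size`. The compressed size is the figure that would feed into the cost estimate.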

Having done the above, we should be able to give a more accurate estimate.

A lot easier said than done... Time to get coding... I may be some time.

Love

Beq

x
