Fantasy Faire is back, so how can we optimise our experience?

Fantasy Faire is one of the most popular annual events on the grid. Featuring stunningly beautiful regions packed with retail delights, alongside art and entertainment events, it also draws massive crowds. This heady concoction does not bode well for frame rates. In this blog, I'll go over a few tricks that we can use to improve our performance and enjoyment.

The challenge posed by the Faire is manifold. We want the best of everything: we want to see the scenery at its best and, unlike at more mundane shopping events, we often want to see other people. "Watching the Faire folk" is a spectator sport frequently enjoyed by Fairegoers. Observing the visitors in their fun avatars and outfits is as much a part of the Faire as shopping and supporting the charity. So while, for a typical shopping event, I might suggest disabling avatars entirely or using the "Show Friends Only" function, for the Faire we want to keep as many people visible as possible.

At the same time, we have all these gorgeous worlds that have been built (I can say that without reservation this year, as I have taken a year off ;-) ), so we want to keep as many features enabled as possible. A perfect storm for lag, and a conundrum for us.

Step 1: Getting yourself ready...

During this blog we'll come back to the new performance floater a number of times, so take a moment to locate it. You can find it on the "World" menu; it is also on the "Advanced" menu, in the "Performance tools" sub-menu. In both cases it is listed as "Improve Graphics Speed". You can also add it to any of your toolbars by opening the Toolbar window (right-click on any toolbar and select "Toolbar buttons..."), then picking the "Graphics Speed" button and dragging it to the toolbar you want it on.

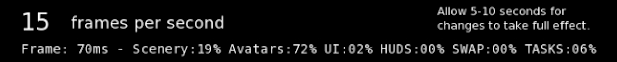

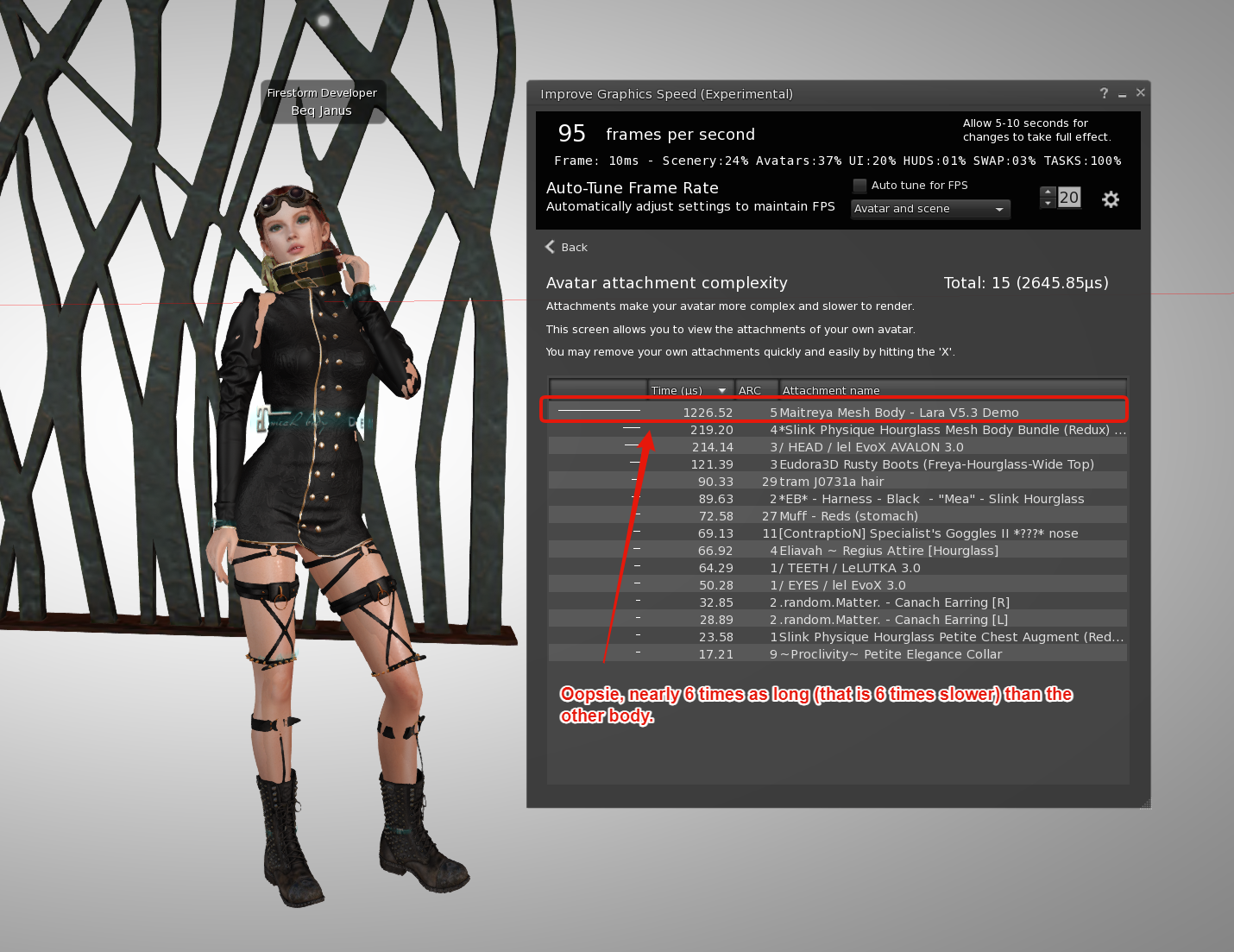

While we are looking at the floater, take a moment to note the top few lines. The FPS needs no explanation really, but the summary line below it is going to help us. The frametime is how long each frame takes to draw. It is measured in milliseconds (a millisecond is 1/1000th of a second) and should be approximately 1000 divided by the FPS.
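As a quick worked example of that relationship, here is the same arithmetic in Python. It is just the 1000 / FPS rule from above, not code taken from the viewer, and the function names are purely illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Approximate time spent on each frame, in milliseconds."""
    # One second is 1000 ms, shared between `fps` frames.
    return 1000.0 / fps


def fps_from_frame_time(frame_ms: float) -> float:
    """The inverse: frames per second for a given frame time in ms."""
    return 1000.0 / frame_ms


for fps in (60, 30, 20, 10):
    print(f"{fps:>3} FPS -> {frame_time_ms(fps):5.1f} ms per frame")
```

Working in milliseconds is handy because the costs of individual items add up to the frame time, whereas FPS figures cannot simply be added together.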

Look at the UI number. Notice that if you have chat and inventory windows open, you can increase your FPS by closing them; even minimising them will help.

HUDs, on the other hand, are misleading. For the most part, they have a comparatively low direct, measurable impact on FPS, but if you wear the gorgeous, texture-heavy HUDs that come with your head and your body, or any other glossy-looking HUDs, remember that those textures take up space in your computer's memory and in that of your graphics card. This means less space for everything else, and it increases the amount of "shuffling around" that has to happen.

As such, best practice is to remove all the HUDs that you can; this also has the benefit of reducing the script load on regions that are already heavily taxed managing countless vendors and other scripts.

Use Firestorm's "favourite wearables" capability to get quick and easy access to the HUDs you need more frequently. For example, I keep my SLink HUDs, my Lelutka head HUDs, my AO and others all there for quick access.

You can also hide all HUDs using Alt-Shift-H, though keep in mind that this does not stop their scripts, which will still be adding to the server load.

Step 2: Set a good example and de-lag yourself.

Before we depart for the Faire, we should do as we would like others to do and reduce our impact on their Faire experience. This means reviewing our outfit(s).

Tip #1 - Try a new look and ditch that laggy body for your visit.

Leave your Maitreya/Legacy/eBody/Signature bodies off until you need them and try a fun fantasy look for a change. This will make you less of a burden on other shoppers in the crowds.

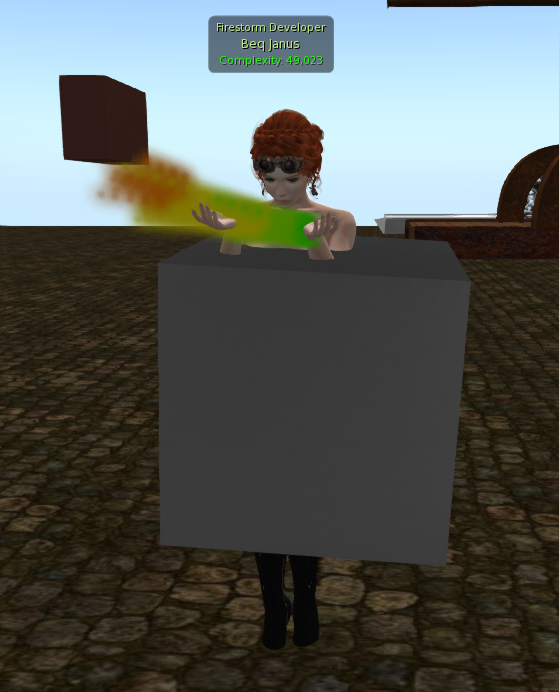

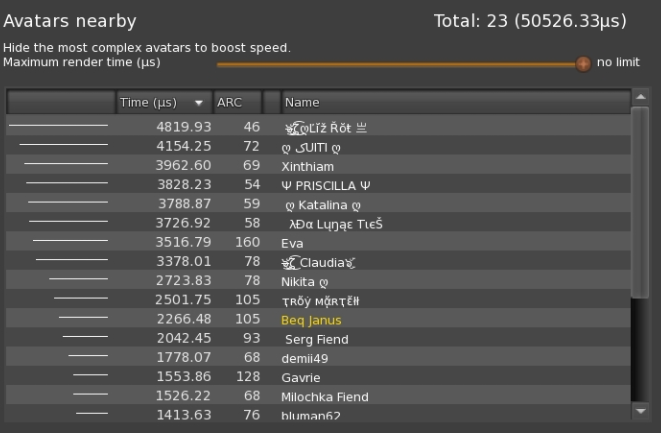

Alpha-segmented bodies are a disaster for frame times. Pick a low-impact body, and why not use the occasion to get into the theme? Many fantasy avatars have a very small performance impact by comparison.

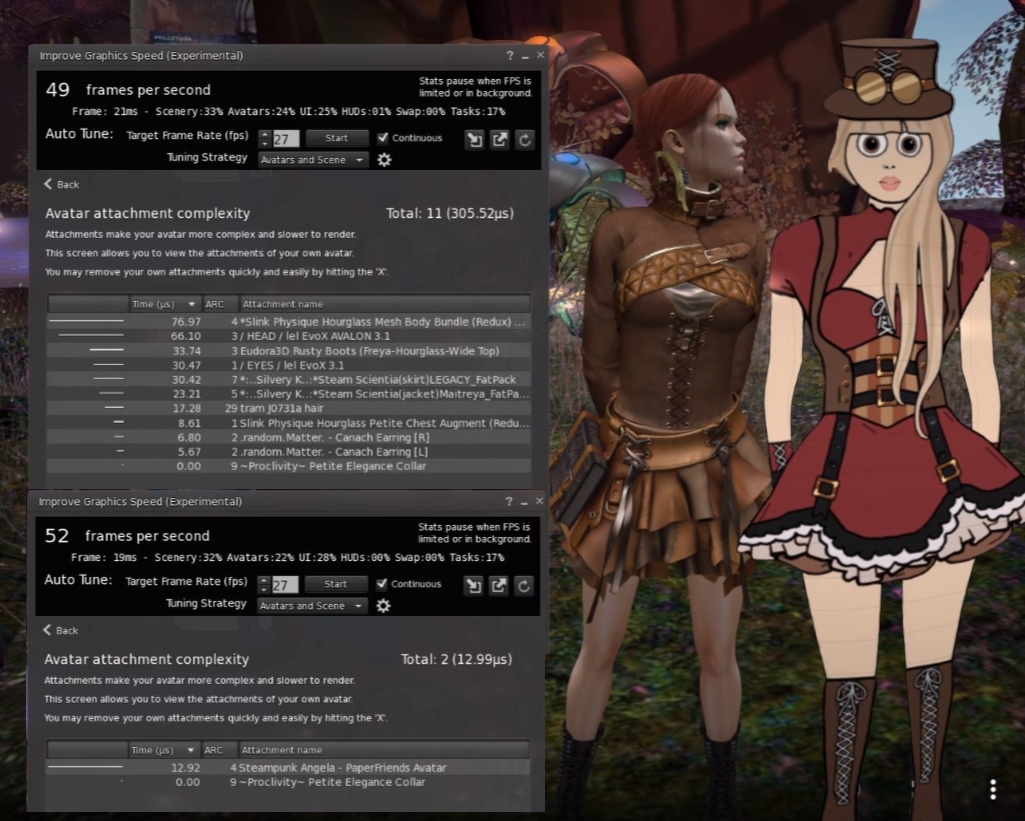

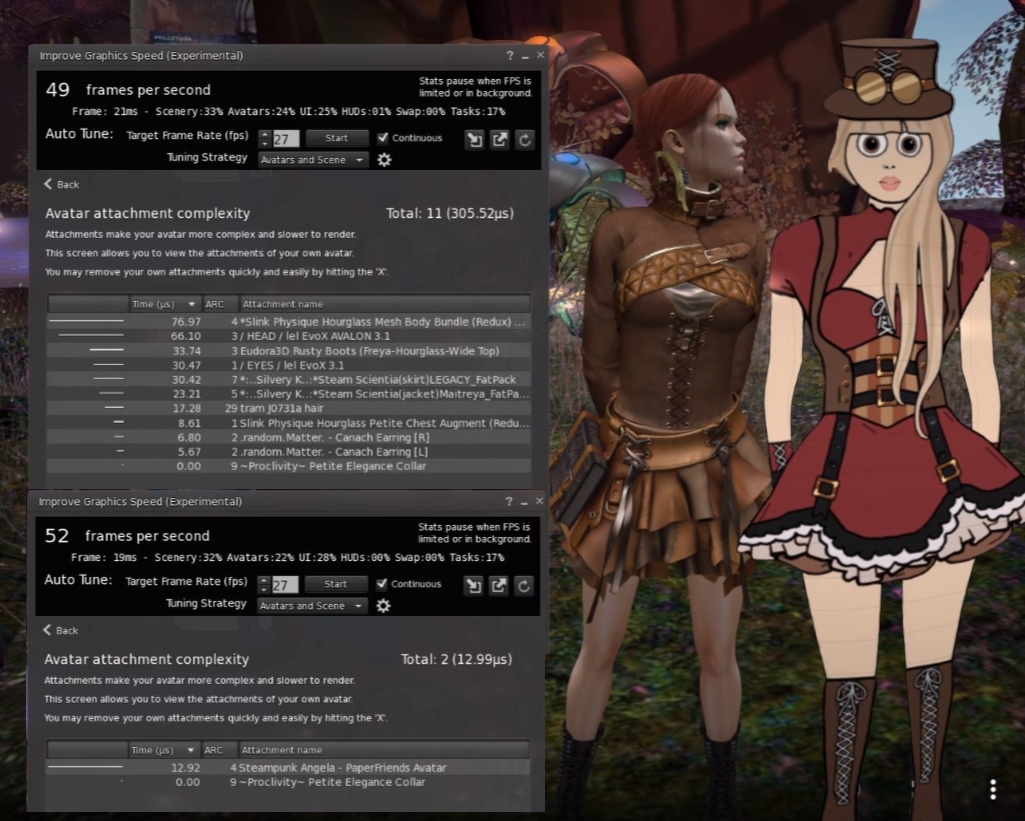

By way of example, here is my normal avatar, which already uses a low-lag SLink Redux body, switching to the fun paperfriends steampunk avatar I bought at the Faire last year.

https://gyazo.com/b1f55c19a6f279a285e4d7548ecdd99d

Even though my SLink body takes about 1/8th of the render time of a typical "popular" body, this fun foldable me takes just 15 microseconds to render, which means I could have 20+ of these avatars around me for less than the cost of a single eBody reborn.
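To put those microseconds in perspective, here is the arithmetic behind the "20+" comparison, using the 15 microsecond figure quoted above. The 60 FPS target is only an illustrative assumption, and the helper function is a hypothetical name chosen for this sketch.

```python
PAPER_AVATAR_US = 15.0  # render time of the foldable avatar quoted above, in microseconds


def avatars_within_budget(per_avatar_us: float, budget_us: float) -> int:
    """How many avatars with a given render time fit inside a time budget."""
    # Floor division: only whole avatars count.
    return int(budget_us // per_avatar_us)


# Twenty paper avatars together: 20 * 15 us = 300 us, i.e. 0.3 ms.
twenty_paper_avatars_us = 20 * PAPER_AVATAR_US

# Illustrative assumption: a 60 FPS target leaves about 16,667 us per frame.
frame_budget_us = 1_000_000 / 60

print(f"20 paper avatars: {twenty_paper_avatars_us:.0f} us")
print(f"Share of one 60 FPS frame: {twenty_paper_avatars_us / frame_budget_us:.1%}")
print(f"Paper avatars per millisecond: {avatars_within_budget(PAPER_AVATAR_US, 1000.0)}")
```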

But it's not just these extremely low-lag avatars; here is a friend demonstrating that size does not matter. This enormous dragon avatar costs a fraction of her regular body choice.

Tip #2 - Use the outfits function in the viewer to set up one or more appearances.

"But I want to shop for my normal body" I hear you cry.

If your typical avatar appearance includes one of the popular but very laggy mesh bodies, consider creating an outfit for it and another for a low-lag fantasy avatar. This will allow you to switch whenever you want to try on your purchases.

Be realistic, but be considerate too. Nobody expects everyone to stop using these poorly performing bodies overnight; we're all too attached (no pun intended) to our wardrobes and bodies, and we all want to show off our new purchases. But take a moment to review your own render times and, if you get the chance, switch to the low-lag options and have some unencumbered fun.

Tip #3 - Ignore ARC - it is misleading, wrong and counter-productive.

When assembling your outfits for the event, ignore the ARC and push aside your preconceptions.

The dragon image above is a great example. The mesh body is 5 times slower to render than the entire dragon, yet the ARC would have you thinking otherwise. The ARC is just wrong; it is based upon outdated and incorrect assumptions.

So if ARC is bad then what do we use?

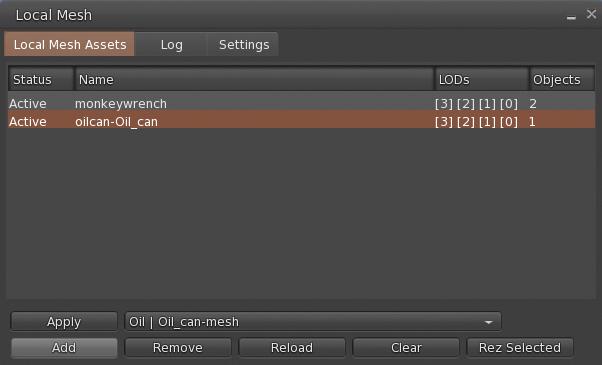

With the latest Firestorm, we have provided a better tool to assess your true impact: render time. We'll use it to determine which of our attachments are causing the most lag, so that we can decide whether to keep them or swap them.

Focus your camera on yourself so that the viewer is showing your avatar, something similar to the photos of me above; then use the "attachments view" of the performance floater to see which of your attachments is the slowest to draw. Try variations, especially with hair, and see how things perform. You may be surprised. As a rule, the worst offenders are typically your body or your hair, or both.

Tip #4 - Make the best of a bad thing

If you are using a segmented mesh body like Maitreya/Legacy/eBody, use its HUD to turn off as much of the body as possible. Anything that is covered over should be disabled; auto alpha often does a good job, but see if you can get rid of more. Every segment that you set to invisible is a performance boost for everyone looking at you.

If you are tempted to wear particles, don't, just don't; remove them immediately. They are a considerable burden on rendering. They won't, however, show up in the stats in the current version of the tool, as I have not yet separated worn particles from those in-world. So while you will not see the item render time change, you will see the FPS drop.

You might want to turn down the max particles setting in the graphics preferences too, but don't forget that particles are often used for effects in the regions.

OK! We've optimised ourselves. We are now ready to go.

Step 3: Quality vs Speed, managing the balancing act - Global settings and scenery

There are some decisions we need to make: we may want things to look their best, or we may want things to run their fastest. Typically we need to find a middle ground that we're happy with.

Your choices in the next few steps will be dictated by your hardware/network and your personal preferences.

For the best-looking experience, you want to ensure ALM is on, shadows are enabled and water reflections are set to their highest quality. These come at a high cost, though, as the next few paragraphs explain.

ALM (Advanced Lighting Model) aka deferred rendering.

For many people, turning on ALM should have a minimal impact on their FPS; for some, ALM may even boost performance, because more processing can be moved away from the CPU and onto the graphics card. When testing this, ensure you are not mixing up ALM with shadows. Turn off shadows if they are on, by setting them to "None" in the preferences/graphics panel. Let your FPS stabilise and make a note of it, then turn off Advanced Lighting, wait, let things settle again and compare.

If toggling ALM off and on is having minimal impact on your FPS then I would strongly advise you to leave it on. It is very hard to see the regions as the designers envisaged with ALM turned off.

People who may wish to keep ALM off are those with limited RAM (I would consider anything less than 8GB limited) and those with slow or metered internet connections.
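If you'd like to put a number on "minimal impact" when toggling ALM, one approach is to average a few settled FPS readings for each state and compare them as frame times. This is only a sketch: the readings are made-up examples, and the 1 ms threshold is an assumption chosen for illustration, not something the viewer uses.

```python
from statistics import mean


def frame_time_cost_ms(fps_on: list[float], fps_off: list[float]) -> float:
    """Extra milliseconds per frame with a feature on, from averaged FPS readings.

    A negative result means the feature actually made frames faster.
    """
    return 1000.0 / mean(fps_on) - 1000.0 / mean(fps_off)


def looks_minimal(cost_ms: float, threshold_ms: float = 1.0) -> bool:
    """Hypothetical rule of thumb: anything under threshold_ms counts as 'minimal'."""
    return cost_ms < threshold_ms


# Example readings noted after letting FPS settle (shadows set to None both times).
alm_on = [49.0, 50.0, 51.0]
alm_off = [50.0, 51.0, 52.0]

cost = frame_time_cost_ms(alm_on, alm_off)
print(f"ALM costs about {cost:.2f} ms per frame; minimal? {looks_minimal(cost)}")
```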

Shadows

A lot of people think disabling ALM gives them a massive performance boost, but often this is simply because doing so also kills shadows. Shadows are a major source of render time; they are also, of course, a major aspect of good lighting and atmosphere. Rendering shadows requires every object to be drawn again from the perspective of the sun and other lights, and this overhead means that shadows at least double, or even triple, the time it takes to draw a scene.

Keeping shadows on or off depends on your objective. If you want nice photos, shadows are imperative; if you want to experience the region as the designer intended, again, you need the shadows. However, some lighting doesn't cast distinctive shadows, yet the effort to draw them is the same, so consider creating graphics presets with and without shadows so that you can quickly switch back and forth.
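To see what "at least double, or even triple" the draw time means in FPS terms, here is a rough sketch. The 20 ms base scene cost is a hypothetical number, and the model deliberately simplifies by treating the whole frame as scene drawing that shadows multiply.

```python
def fps_with_shadows(base_scene_ms: float, shadow_multiplier: float) -> float:
    """Estimated FPS if shadows multiply the time spent drawing the scene.

    Simplification: treats the whole frame as scene drawing.
    """
    return 1000.0 / (base_scene_ms * shadow_multiplier)


BASE_SCENE_MS = 20.0  # hypothetical scene that runs at 50 FPS without shadows

for multiplier in (1.0, 2.0, 3.0):
    fps = fps_with_shadows(BASE_SCENE_MS, multiplier)
    print(f"x{multiplier:.0f} draw time: {fps:.1f} FPS")
```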

Water reflections

Water in SL looks lovely when the full reflections and refractions are being drawn, but for technical reasons, even when you cannot see the water, all those little ripples and highlights are still being drawn.

The ripples and reflections mean that every object that might possibly be reflected is redrawn, the more detailed and cluttered the region (meaning the more "stuff" there is) the more work is being done for the water, even if you cannot see the results of that work.

If you are in a region where the water is not visible, or if you do not care too much about the quality of the water, then set Water reflections to None:Opaque.

In general, it is worth taking a moment to use the performance floater and experiment with different settings to see the impact. In my experience, there is little to no performance difference between the top-level water reflections and the so-called "minimal"; however, it does depend on how much "stuff" is around. Dropping all the way down to "None:Opaque" will grant you a significant FPS boost.

Create presets with good reflections and with no reflections so you can switch easily.

Step 4: Quality and Quantity

If the three settings above are all about the quality of rendering, the other defining factor is quantity: the number of objects that the viewer has to draw.

Draw Distance

The next tool is one we are all probably aware of: the Draw Distance (DD). Draw Distance limits how far ahead we can see. Inside a city scene we can often afford to shorten that distance, while in the countryside or out on the open plains we need to see further. By selecting a sensible DD, we limit the amount of "stuff" that the viewer has to deal with, and this can have a dramatic effect in a busy scene.
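Why can a shorter draw distance have such a dramatic effect? Here is a back-of-the-envelope sketch, assuming the "stuff" is spread roughly evenly across the land so that the area in view grows with the square of the distance. Real regions are rarely that even, so treat this as intuition rather than a prediction; the function name is illustrative only.

```python
def relative_scene_load(new_dd_m: float, old_dd_m: float) -> float:
    """Rough ratio of 'stuff' in view when changing draw distance.

    Assumes content is spread evenly, so load scales with area, i.e. distance squared.
    """
    return (new_dd_m / old_dd_m) ** 2


# Halving the draw distance from 256 m to 128 m under that assumption:
print(f"{relative_scene_load(128, 256):.0%} of the original area")
```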

Max non-impostors.

Whilst this is technically an avatar setting, and we'll talk more about those shortly, it behaves similarly to Draw Distance. When we are in a crowd, we can use this setting to limit the number of avatars that are drawn at full detail, but what people often do not realise is that the viewer draws the closest avatars first. Thus, if we set Max non-impostors to 10, the viewer will draw the ten closest avatars in all their glory but use impostors (flat cutouts) for those further away. With impostors carefully managed, you can often improve scene rendering times considerably when things get crowded.
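The "closest avatars first" rule can be modelled as a simple sort-and-split. This is a simplified sketch of the behaviour described above, not the viewer's code; the `Avatar` type, the function name and the example crowd are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Avatar:
    name: str
    distance_m: float  # distance from you, in metres


def split_by_non_impostor_limit(avatars: list[Avatar], max_non_impostors: int):
    """Return (full_detail, impostors): the closest N avatars are drawn fully."""
    by_distance = sorted(avatars, key=lambda a: a.distance_m)
    return by_distance[:max_non_impostors], by_distance[max_non_impostors:]


crowd = [Avatar("Ash", 12.0), Avatar("Bryn", 3.5), Avatar("Cal", 40.0), Avatar("Dee", 7.2)]
full, flat = split_by_non_impostor_limit(crowd, 2)
print([a.name for a in full])  # ['Bryn', 'Dee']
print([a.name for a in flat])  # ['Ash', 'Cal']
```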

We have now covered the major settings that have a direct impact on the quality/speed balance.

Play with these settings and create yourself a set of graphics presets that you can switch between as best suits the region you are in, and the activity you are enjoying.

Step 5: Friends, family, strangers and lag

At the top of this blog, we walked through choices that we might make to optimise our appearance. Some of you will have followed them; others will have resolutely refused to reconsider your choices :-)

Irrespective of your decisions, we will find many others that have chosen to stay as they were or *shockingly* have not even read this blog ... I know, it's hard to believe, right?

We've made the best choices we can about our scenery; what can we do to protect ourselves against laggy crowds?

We have been trained (erroneously) to use complexity to limit whom we render. Complexity still has a role in blocking the extremely slow, but as we observed before, using ARC for any purpose produces far too many false alarms and leads people to make the wrong decisions.

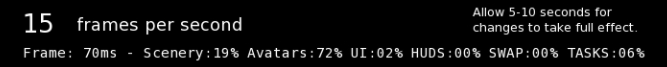

With the newest Firestorm, we now have render time. I've discussed the background to render time in earlier blogs, but what we can do now is use it to automatically force laggy avatars to behave better.

On the Avatars nearby tab, we see the Maximum render time slider. You can use this to manually set a cutoff. What this value should be will depend on your hardware. You can get an idea by going to a club, an event or a busy store and seeing how long typical avatars take to render.

The render time limit allows the viewer to take some avatar-specific actions. If your machine is capable enough and you decided to render shadows in Step 3, you'll find that avatars have a massive impact on your FPS, because every avatar can take up to 3 times longer to draw than it would without shadows. Using the render time limit, we can set the threshold so that complex and slow avatars (yes, those with the segmented bodies, did I mention them yet?) have their shadows unilaterally disabled. This still lets you render them fully, just without shadows, which is a much better visual option than the horrible grey mess of a jelly doll.

If an avatar still exceeds your threshold once its shadows have been disabled, the viewer will create an impostor image. Unlike jelly dolls, this impostor is typically fully detailed and, from a distance, looks relatively normal. The most noticeable effects are that it will animate far more slowly and, up close, it will look pixelated.

Use the limit carefully, and remember to set it back to high/unlimited when you no longer need it, because in this initial release of the feature I have not included a simple reset button (it's on the TODO list).
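The two-stage behaviour of the render time limit can be modelled roughly as follows. This is a simplified sketch, not Firestorm's actual code: the enum, the function and the idea of knowing an avatar's shadow-free cost up front are assumptions for illustration, and the example render times and slider value are made up.

```python
from enum import Enum


class RenderMode(Enum):
    FULL = "full detail with shadows"
    NO_SHADOWS = "full detail, shadows disabled"
    IMPOSTOR = "impostor"


def choose_render_mode(time_us: float, time_without_shadows_us: float,
                       limit_us: float) -> RenderMode:
    """Simplified model of the maximum render time behaviour described above."""
    if time_us <= limit_us:
        return RenderMode.FULL
    # Over the limit: the first step is to drop this avatar's shadows.
    if time_without_shadows_us <= limit_us:
        return RenderMode.NO_SHADOWS
    # Still over the limit without shadows: fall back to an impostor.
    return RenderMode.IMPOSTOR


LIMIT_US = 500.0  # hypothetical slider value, in microseconds
examples = [
    ("light", 300.0, 150.0),
    ("medium", 900.0, 350.0),
    ("heavy", 1800.0, 700.0),
]
for name, with_shadows, without_shadows in examples:
    print(name, choose_render_mode(with_shadows, without_shadows, LIMIT_US).value)
```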

While there is no magic wand that I can wave to make things run faster, and in the end, your machine can only do so much, following these tips will help you understand the impact things have and how you can limit and control them.

Final words - Autotune and defaults.

In closing, I should mention the AutoTune feature.

The new Autotune capability of Firestorm is my first attempt to bring some degree of automation to the settings discussed above. Based upon the scene and the target FPS that you ask it to achieve, it will tune settings such as the water reflections, the draw distance and the avatar render time limit. It was designed specifically with crowded events in mind, and it aims to keep the visual quality of the scene as high as possible using just the controls we have discussed here.

It has been working very well for many people, but for a few, it can be frustrating and a little disconcerting to have their settings changing as they walk around. You'll need to make your own choices.
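To give a feel for the kind of feedback loop involved, here is a deliberately naive sketch. It is not how Autotune is actually implemented: the priority order, the setting values and the one-step-at-a-time rule are all assumptions made for illustration. A real tuner would also need to smooth noisy FPS readings and raise settings again when there is headroom, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import Any, List, Optional


@dataclass
class Knob:
    """A tunable setting; values are ordered from best-looking to fastest."""
    name: str
    values: List[Any]
    index: int = 0

    @property
    def current(self) -> Any:
        return self.values[self.index]

    def can_lower(self) -> bool:
        return self.index < len(self.values) - 1


def autotune_step(knobs: List[Knob], measured_fps: float, target_fps: float) -> Optional[str]:
    """If below target, lower the first knob that still has room; return its name."""
    if measured_fps >= target_fps:
        return None
    for knob in knobs:  # hypothetical priority order: first listed is lowered first
        if knob.can_lower():
            knob.index += 1
            return knob.name
    return None  # nothing left to lower


# Hypothetical knobs and values, for illustration only.
knobs = [
    Knob("water reflections", ["highest", "minimal", "None:Opaque"]),
    Knob("draw distance (m)", [256, 192, 128, 96]),
    Knob("max avatar render time (us)", [float("inf"), 2000, 1000, 500]),
]

for fps in [18.0, 22.0, 27.0, 31.0]:  # made-up FPS readings over time
    changed = autotune_step(knobs, fps, target_fps=30.0)
    print(f"{fps:4.1f} FPS -> lowered: {changed}")

print({k.name: k.current for k in knobs})
```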

If all else fails and you end up with graphics settings that seem confused or broken, simply ensure that autotune is disabled, and click the "reset to defaults" button on the performance floater.

Have fun; I hope this helps you get a little more out of your machine and a less laggy Fairelands experience.