OK, buckle up; this one is going to be a long one.....

We all know the deal: you go to a shopping event, and you wade through a tar pit of lag until you can click the "render friends only" button and remove all the avatars.

More Avatars = More Lag

Why do avatars cause so much load?

If you look through the blogs and forums, you'll find that there is much conjecture over the causes, and you can easily find an "expert" who'll explain to you the problem of onion skin meshes, triangle counts, poor LODs, and invisible duplicate meshes to name but the most common.

As with many myths and pseudo-scientific speculation, there is an air of plausibility, and often enough, a grain of truth. However, while many of these may contribute to lag, the hard experimental evidence points to something so large that it eclipses them all.

We'll examine each of these "usual suspects" as an appendix at the end of this post. For now, let's cut to the chase.

The number one cause of lag is...Alpha cuts

Alpha cuts? You know that nice little convenience feature, the one that lets you hide parts of your body? The ones where over the years, people have nagged and pushed for more and more detail in the alpha slices. Every one of those little areas is a "submesh", and (as I'll explain) these are the number one cause of avatar-induced lag without any shadow of a doubt. Until BOM, of course, it was not a convenience feature; it was the only way to alpha a mesh body. A requirement because of a shortcoming that dates back to the first use of mesh bodies. But these days, we have BOM, and for the majority of uses, this same alpha effect can be accomplished with more precision and far more efficiently using an alpha layer.

Why are these specifically such a problem?

Every mesh object in SL is made up of one or more "submeshes". Each submesh represents a texture face on that model (allowing it to be coloured, textured, or made invisible independently from the rest.)

A mesh object can have at most 8 texture faces (submeshes); after that, if we need more independent faces, we have to add another object and start to add faces to that, repeating until we are done.
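To make the 8-face limit concrete, here is a minimal Python sketch of how many separate objects a body needs for a given number of independent faces. The function name and the example counts are purely illustrative; only the limit of 8 comes from the text above.

```python
import math

# Limit described above: one mesh object carries at most 8 texture faces.
MAX_FACES_PER_OBJECT = 8


def objects_needed(total_faces):
    """Smallest number of mesh objects that can carry `total_faces` faces."""
    if total_faces < 0:
        raise ValueError("total_faces must not be negative")
    return math.ceil(total_faces / MAX_FACES_PER_OBJECT)


if __name__ == "__main__":
    # Illustrative face counts, not measurements of any real body.
    for faces in (8, 9, 220):
        print(faces, "faces ->", objects_needed(faces), "objects")
```

Going one face over the limit forces a whole extra object, which is why heavily sliced bodies end up as long lists of linked meshes.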

In the viewer rendering pipeline, every submesh results in a separate package of data (known as a drawcall) being sent to the GPU (this parcel of goodies gets unwrapped by the GPU and used to draw part of the final image that will appear on your screen).

This is important because "drawcalls" have a substantial overhead. You can picture it like this.

We have a production line in our living room, making little sets of triangles and sending them off to our client (the GPU). We cheerfully pack triangles into a box, along with all the necessary paint and decorations needed to make them look pretty, wrap them up securely, put a bow on top, walk to the post box, and pop the box in the mail to the GPU.

How long does this take us?

If we break this process down, we find that packing the triangles themselves is remarkably quick; we can pack 10,000 triangles rapidly (for illustration, we'll say 5 seconds). But packing it into the box with all the other paraphernalia and walking to the post box with it takes an awful lot longer (let's say 5 minutes just for this illustration). In fact, it takes so much longer that it doesn't really matter how many triangles we are cramming into the boxes; the time spent dispatching them will dwarf it.

Suppose we have a mesh body of 110,000 triangles to send to the GPU. Placing it in one large box will take us:

11 x 5 seconds to pack triangles in a box = 55 seconds (let's call it 1 minute)

1 x 5 minutes to send that box. = 5 minutes

The total time to send our body was 6 minutes.

If instead, we chop up the body and send it out in 220 separate parts:

11 x 5 seconds to pack the triangles = 55 seconds (it is the exact same number of triangles)

220 x 5 minutes = 1100 minutes

Total time to send our body is now 1101 minutes, or 18 hours and 21 minutes.
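The box-packing arithmetic can be sketched in a few lines of Python. The timings are the made-up illustration values from the analogy (5 seconds per 10,000 triangles, 5 minutes per box), not real GPU measurements, and the function name is my own.

```python
# Illustration values from the analogy above, not real measurements.
PACK_SECONDS_PER_10K_TRIANGLES = 5
DISPATCH_SECONDS_PER_BOX = 5 * 60


def send_time_seconds(triangles, boxes):
    """Time to pack `triangles` and dispatch them in `boxes` separate boxes."""
    packing = triangles / 10_000 * PACK_SECONDS_PER_10K_TRIANGLES
    dispatch = boxes * DISPATCH_SECONDS_PER_BOX
    return packing + dispatch


if __name__ == "__main__":
    one_box = send_time_seconds(110_000, 1)      # 355 seconds, just under 6 minutes
    many_boxes = send_time_seconds(110_000, 220)  # 66,055 seconds, about 1101 minutes
    print(f"one box:   {one_box / 60:.1f} minutes")
    print(f"220 boxes: {many_boxes / 60:.1f} minutes")
    print(f"slowdown:  {many_boxes / one_box:.0f}x")
```

The packing term is identical in both cases; only the number of boxes changes, and that alone accounts for the difference.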

That Mesh body your avatar is wearing is very likely to be in the Render time equivalent of hours rather than minutes. We'll be looking at some "real" numbers next time.

To put this in context, here are some "typical" numbers collected in an in-world survey, jumping around places in SL.

| Body | Total faces | Average visible faces | Times slower than best in class (higher is worse) |
|---|---|---|---|
| Maitreya Lara | 304 | 230 | 12.78 |
| Legacy | 1471 | 340 | 18.89 |
| Belleza Freya | 1116 | 190 | 10.56 |
| SLink HG redux | 149 | 30 | 1.67 |
| Inthium Kupra | 83 | 18 | 1.00 |
| | | | |
| Signature Geralt | 903 | 370 | 8.22 |
| Signature Gianni | 1159 | 431 | 9.58 |
| Legacy Male | 1046 | 174 | 3.87 |
| Belleza Jake | 907 | 401 | 8.91 |
| Aesthetic | 205 | 205 | 4.56 |
| SLink Ph. Male BOM | 97 | 45 | 1.00 |

Notes:

I quote the average number of visible faces instead of the total faces because the drawcall cost of fully transparent "submeshes" is avoided in almost all viewers. This number varies depending on the outfits worn, so it is only fair to judge by the "typical" number visible during our sampling.
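The "times slower" column appears to be each body's average visible faces divided by the lowest figure in its group (18 for the female bodies, 45 for the male ones). That reading is inferred from the numbers in the table rather than stated with them, so treat this Python sketch as a way to check the arithmetic, not as the survey's actual method.

```python
# Average visible faces, copied from the table above.
FEMALE = {
    "Maitreya Lara": 230,
    "Legacy": 340,
    "Belleza Freya": 190,
    "SLink HG redux": 30,
    "Inthium Kupra": 18,
}
MALE = {
    "Signature Geralt": 370,
    "Signature Gianni": 431,
    "Legacy Male": 174,
    "Belleza Jake": 401,
    "Aesthetic": 205,
    "SLink Ph. Male BOM": 45,
}


def times_slower(visible_faces):
    """Ratio of each body's visible faces to the best (lowest) in its group.

    Assumption: cost scales with visible faces, i.e. one draw call per face.
    """
    best = min(visible_faces.values())
    return {name: round(count / best, 2) for name, count in visible_faces.items()}


if __name__ == "__main__":
    for group in (FEMALE, MALE):
        for name, ratio in times_slower(group).items():
            print(f"{name:20s} {ratio:6.2f}")
```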

As you can see, we are all paying a remarkably high price for the convenience of alpha slicing.

Bodies are, of course, the number one offender, closely followed by certain brands/styles of hair and heads.

Don't blame the body makers.

Let's be clear why we have ended up this way. It is the lack of a clear understanding of how bad this was. In this ignorance, many designers, body makers, and most importantly, you and I, the customers, have not only let this continue, but we have also encouraged it. Demanding more cuts, more flexibility. I've seen blog posts and group messages written out of complete ignorance, asserting that "[body creators] who have forced their users to adopt BOM and removed alpha cuts" had "got it wrong".

No, they got it right; this was entirely what was hoped for; it's just that people were not ready for it, and arguably the tooling and support were not either.

So what now? How do I get a lower lag body? I like my wardrobe

Write to the CSR of your favourite body and ask for a cut-free edition.

Let's face facts. We all like our wardrobes, and we don't like to give that up, and we don't necessarily need to. The same body, the same weights, can work without the cuts. None of your clothing goes to waste, though you will need alpha masks to take the place of those HUD-based alphas and the "auto-alpha" scripts.

If enough of us ask for an efficient version, then one of two things will happen:

EITHER:

The body makers you contact will provide uncut versions alongside the cut versions and share some of the knowledge as to why the uncut ones are better.

I sincerely hope that they will; in the end, this ought to be less painful for them - they have to spend a lot of time slicing those models up and tweaking things.

OR:

New bodies will fill the gap for performance, and slowly people will move over. We already have two female cut-free bodies for those willing to move.

There is, of course, a second part to this. Those lower lag bodies need alpha layers, and clothing creators need to start offering them. With the recent success of the Kupra body, this is finally happening anyway, and over time it will help make clothes more flexible, as they will no longer have to "cut the cloth" to where the alpha cuts are. For older clothes, it is worth noting that many outfits do not need an alpha; many more can work with standard off-the-shelf alphas. So all those old outfits are probably fine. What is more, if you place the alpha layers into the outfits folder with the clothing, then they will be automatically worn and replaced.

Finally, remember, this is not do-or-die. We can still choose to wear an older laggy body when we want a specific older outfit that we haven't managed to get an alpha for and for which standard alphas do not work.

This is all very well, Beq, but what about X?

There are undoubtedly a bunch of you going, yep, but my XYZ outfit needs blah and what about this corner case over here?

None of those are going away. You will still have the choices. If there is an apparent reason why you need to have a more complex body, then nobody is stopping you. All I am doing here is waving a red flag to highlight just how much damage this "convenience" function is doing.

For example, time and again, people raise the "oh but I must have onions cos BOM has no materials" objection. The appendix talks about this. If you need onion skins, you can have them, but if you have them on a 12 mesh body, you'll now have 24 meshes. If you have them on a 240 mesh body, you'll now have 480... kill the cuts. After that, I'll moan about onion skins, but it'll have far fewer teeth :-)

If we can move to a saner "normal" where bodies aren't made up of hundreds of tiny fragments, then we can all afford to have extra onion skins for our materials, etc., without breaking the bank.

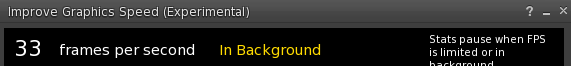

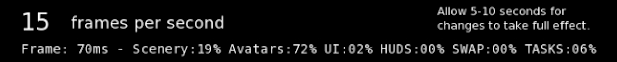

Can't the viewer make this better?

Not entirely, no. In the course of investigating this, I have identified a few things that can be optimised. But, even if I make the rendering cost of tiny meshes more efficient, all we do is move the scale a little; things would still be 10 or more times slower, and more importantly, we are still wasting time doing a largely pointless activity.

In the long term, the picture does change a bit. One day, we are promised that the viewer will migrate to a more modern pipeline; when we reach that point, the overhead of these so-called "draw calls" will be diminished, and the argument may flip back towards total triangles, etc.

However, that is not yet. In fact, that is likely to be a good couple of years away, and keep in mind that some people do not even have a machine that can run a modern rendering pipeline.

To put it bluntly, we can act, all of us, me, you and your friends, we can act now, to fix this problem and move to a far more sane world where we can go to large events without having to disable all our settings. Or we can wait and hope things get better naturally before everyone abandons SL for being a laggy swamp and goes someplace else.

Finally, there are a few "nice to haves" that we could really do with: a mix of viewer features and server-side features.

1) An easier way to make alphas; this is something I have proposed to the lab in a Jira feature request, but it is not currently on the "book of work".

2) We also need a few UI/LSL tweaks to allow HUDs to wear/remove alphas without things like RLV.

3) Perhaps this is really #1. We need solid, reliable tools that tell us, both as creators and most importantly as users, whether an item is efficient or not.

None of these is required for things to start moving forward. At the same time, all of these "nice to haves" are entirely doable. Nothing here is out of reach.

Come on then, Beq, prove your point.

OK, so there's a lot of blame being assigned here, mostly to ourselves as users, so I'd better have a good argument to back this up... I think I do, so before you flame me in the comments, let me show you why. My next post will share some empirical data gathered through weeks of performance testing that supports my arguments; I'll show you the extensive testing done and explain how you might try to do something similar to see it for yourself.

UPDATE: Part 2 is now online. Warning, statistics ahead: 9/10 of you will be bored, the other half will love it.

Appendix

But what about ...?

Here's a quick appraisal of the typical perspective on lag. The high-level summary is that most of these are valid observations and identify some form of inefficiency. Whether they contribute to FPS loss is another question, and until we get rid of the massive issue around draw calls, their effect is moot.

The poor LODs and poor LOD swapping:

There are several issues here that get conflated, and while each is a valid problem, they do not, on the whole, impact your rendering performance (hear me out on this).

For a long time now, I have been ranting about how poor LODs affect the quality of our lives in SL. This has not changed; moreover, the bugs that affect Rigged mesh rendering mean that they rarely get shown even when a creator has provided good LODs.

So that's two issues,

1) Creators do not provide proper LODs

2) The LODs are not shown.

Number one is moot if number 2 is not fixed and number 2 is not being fixed by the lab because of number 1. That is to say that if we make rigged mesh behave as it "should" (thus fixing point 2), then your clothes would vanish very quickly because of point 1, which leads to grumpy people.

We are, as they say, between a rock and a hard place...

But does it affect the FPS? Yes, to some extent. If you have a high detail LOD that is being drawn at a distance where most of the triangles resolve to a small number of pixels, then the GPU still has to process every vertex, and a pixel in the final render can end up being shaded more than once. This is known as "overdraw". It means that your GPU is doing a lot more work than it needs to. Simply put, if every pixel on your screen was shaded once and then had to be shaded again, it would take twice as long as shading just once. This is a great example of a GPU bottleneck, which we rarely see in SL due to the draw call problems that dwarf everything else. These are real problems; they are just hidden from view right now.

So it's those feet, those invisible feet!!!?

Or increasingly "OMG those lag inducing multi-style hairs".

You'll see this on many blogs that discuss complex bodies and the impact of rendering, and to be fair, this is entirely plausible, and I have believed it myself. However, tests prove otherwise.

Due to limitations in the Bento body, we do not have usable toe bones nor the morph targets that would allow us to distort the foot meshes to fit our shoes. As a result, we have multi-pose feet on our bodies.

Once upon a time, I used to wear SLink single pose feet; I would wear the flat feet in a sandals outfit and the high feet in a high-heels outfit, etc. At some point, market pressure for convenience won out, and body makers started to package all the feet together. Now, when I am wearing my feet, the mesh will be many feet, all bundled up. If I have 6 poses for my feet, then 5 of these will be fully transparent. Leaving just the one set visible.

We also see a similar trend in hair; rigged hairs with "style HUDs" that allow us to alter the appearance.

I am as guilty as anyone for thinking that this causes significant (wasted) effort and thus lag in the viewer. Undoubtedly, there is overhead; let's not ignore that all of this data has to be downloaded, unpacked, and held in RAM. This is all wasteful, BUT it is not a significant contributor to the rendering lag, which is what we are focussed upon today. That is primarily because most if not all viewers now have an optimisation in place that prevents the rendering of fully transparent meshes (thanks, I believe, to NiranV Dean's nifty optimisation).

A problem for the future? Probably. A serious issue right now... No, there are far bigger fish to fry.

Is it Triangle counts?

OK, this is the high-poly elephant in the room, I guess.

"OMG, XYZ body is 500,000 triangles. What a nightmare, no wonder it lags me out."

This, as it turns out, is highly subjective, and while triangles ultimately do impose a certain load, it does not at the present time matter anywhere near as much as it should.

There are so many uninformed, technically inept, or just plain lazy creators out there who throw absurdly dense meshes into SL* and as with the poor LODs above, they are undoubtedly a source of lag and of load, causing overdraw and unnecessary, or at least inappropriate, data storage and transfer. This is predominantly going to manifest as GPU load, with a side-helping of RAM pressure and cache misses.

Thus, the full answer is more subtle and depends a little on your computer. In general, if you have a dedicated graphics card (GPU), then the chances are it doesn't matter that much in terms of FPS right now. Remember our box packing antics? We need to pack an awful lot of triangles before it starts to approach the cost of dispatching the box. If you have a machine that relies on the onboard graphics, then the story is slightly different, but even then, the number of triangles (within reason) is not the number one cause of lag.

I'll illustrate this more clearly when we get to the benchmarks and numbers (which may be tomorrow's post).

* In their defence, creators (clothing creators in particular) are under pressure to deliver new content at a high rate and for surprisingly low returns (for many). If you consider the amount of real-world time that has to go into producing an item and then consider the Second Life price and shelf life, you can start to appreciate that corners are cut to meet demands. So, once again, we are in part to blame; we, the consumers, do, after all, create the marketplace and the demand. Far too many of us accept inefficient content, and perhaps more importantly, we have limited tools to identify well-made, efficient content.

Is it Onion skin meshes?

"So.. it's those damnable onion skins, I knew it."

Well, yes and no. The onion skins are indeed one of the contributors, but they are to some extent guilty by association with the actual FPS murderer. Every onion skin is another draw call, and each onion skin layer is a complete skin; it doubles the number of drawcalls. But doubling 6 meshes into 12 is nothing like as much of a problem as doubling 60 into 120 or 120 into 240. For every onion layer you add to a body made of N meshes, you add another N drawcalls.
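As a rough Python sketch of that scaling, assuming one draw call per mesh and that each onion layer duplicates every mesh of the body (the function name is my own):

```python
def draw_calls(base_meshes, onion_layers):
    """Draw calls for a body of `base_meshes` meshes with extra onion layers.

    Assumption: one draw call per mesh, and every onion layer is a complete
    copy of the body, so each layer adds another `base_meshes` draw calls.
    """
    if base_meshes < 0 or onion_layers < 0:
        raise ValueError("counts must not be negative")
    return base_meshes * (1 + onion_layers)


if __name__ == "__main__":
    for meshes in (6, 60, 120):
        extra = draw_calls(meshes, 1) - draw_calls(meshes, 0)
        print(f"{meshes} meshes + 1 onion layer = {draw_calls(meshes, 1)} "
              f"draw calls ({extra} extra)")
```

The multiplier is the same in every case; what changes is the absolute number of extra draw calls, which is why the cuts matter more than the layers.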

It is for this reason that people who moan at me, "Oh, but we must support materials, and we need layers", get an unconcerned shrug. If we lived in a world where all our bodies were 10 meshes and some people needed 20, that would be fine. You are not going to notice, especially when most people will have them empty. Moreover, you could have onion layers as an optional wearable, thus avoiding the cost for the majority of us and giving optionality to the others. Ironically, Maitreya detached the onion layers in just this manner and got nothing but grief for it!

End of Appendix.